Introduction

I am continuing a series of blog entries documenting how I set up my home server. Part 1 contains a description of what I am trying to accomplish and instructions for doing the initial install. In this part, I set up the MythTV Digital Video Recorder software. I’ve found this part particularly tedious, and the most frustrating part of setting up the server, since it requires hardware setup. This is one of those times where I strongly suggest you do a test install on your PC first, just to get the hang of it. I still don’t have a thorough understanding of all this, but was able to fumble my way through the setup.

My recording situation is probably not common. The first 100 or so cable channels are included with my apartment rent. The broadcast channels (i.e. those you can get with an antenna) are digital, but the rest of the channels are still analog – you need to get a cable box in order to get the digital versions. So, I still need both a digital and an analog tuner. I have two video capture cards installed:

- pcHDTV HD-5500 – This can tune both digital and analog channels, but not at the same time. It looks like the digital tuner can record more than one program at a time – I’m not sure of the details of how this works.

- Hauppauge WinTV-HVR-1600 – This can tune digital and analog channels at the same time (it has two cable inputs, one for each). Unfortunately, I had problems with the digital tuner tuning properly, so I am not using it. On the positive side, the card has an MPEG-2 encoder built in for the analog signal, which means less load on my CPU.

I am setting up a headless server, so I will only install the MythTV backend. The videos will be watched on a separate PC, which can either use the MythTV frontend, or stream the video via MythWeb using a standard web browser and video player.

Here are some guides I used during the setup:

Testing the Capture Cards

Before I set up MythTV, I find it worthwhile to test the video capture cards. Some video capture cards have less than perfect Linux support (although I can see the developers have put a huge amount of work into developing drivers, the hardware is often closed source). Getting MythTV working and getting the hardware working are both frustrating enough when done separately, much less at the same time. Getting the video cards working outside MythTV also helped broaden my understanding of the hardware and drivers.

There are different types of capture cards, which are summarized on MythTV’s capture card wiki page. I imagine that only a small subset of people receive analog broadcasts anymore. But an analog card could still be useful if you are trying to record from a VCR or camcorder, or if you are getting an analog signal from a cable box.

There are many programs out there for playing the video from the capture cards, but I use MPlayer, so let’s install it with this command:

sudo apt-get install mplayer

I also used MPlayer on my client PC to play back recorded files. Note that MPlayer has many options, and there are many other utility programs out there, so there are different ways to do the test. For example, you can tune your card using MPlayer, but I use an external program.

In order to do the tests via a remote connection, you will need to use SSH with X11 Forwarding enabled, as explained in Part 1. Keep in mind that the video will be slow and you will not get sound (I have read there are ways to get sound via SSH, but I haven’t tried yet).

You will be dealing with devices in the /dev folder. To figure out which device is which, you can use this command to query the specifications for that device (xxx is the device file):

udevadm info -a -p $(udevadm info -q path -n /dev/xxx)

You can also look at your boot log to see if a particular device was loaded:

dmesg

To look for a particular piece of hardware (in this example, the cx88 chipset):

dmesg | grep -i "cx88"

Generally, you will look for chipsets that are included on your capture card. As with all computer hardware, the chipset’s manufacturer/model number will likely not be the same as the card’s manufacturer/model number. You will need to do some research to figure out which chipsets are used on your particular card.
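With several cards installed, it can help to boil the udevadm output down to the few attributes that identify a card. Here is a minimal sketch, assuming the output format shown in this post; the helper name card_ids is made up for illustration:

```shell
#!/bin/sh
# card_ids: read "udevadm info -a" output on stdin and print the first
# non-empty driver, subsystem vendor and subsystem device that appear.
# (Hypothetical helper name; the attribute names are the ones udevadm prints.)
card_ids() {
    awk -F'"' '
        index($0, "DRIVERS==") > 0 && $2 != "" && d == ""       { d = $2 }
        index($0, "ATTRS{subsystem_vendor}==") > 0 && v == ""   { v = $2 }
        index($0, "ATTRS{subsystem_device}==") > 0 && s == ""   { s = $2 }
        END { printf "driver=%s vendor=%s device=%s\n", d, v, s }
    '
}
```

It would be used like this (xxx is the device file): udevadm info -a -p $(udevadm info -q path -n /dev/xxx) | card_ids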

Digital Tuner Card

I imagine most people recording TV will use a digital card, due to the cutover to digital broadcasts. There are different digital standards internationally – mine is using the United States standard. And there are different standards based on whether you receive your TV via cable (QAM) or via an antenna (ATSC). In my case, I am receiving via cable.

The digital video capture card devices can be found under /dev/dvb/adapterX, where X is an adapter number (you will have multiple adapters if you have multiple cards installed). I use the udevadm command line I gave earlier, running it against the /dev/dvb/adapterX/dvr0 device. I know my pcHDTV card when I see this output:

looking at parent device '/devices/pci0000:00/0000:00:0e.0/0000:02:0a.2':

KERNELS=="0000:02:0a.2"

SUBSYSTEMS=="pci"

DRIVERS=="cx88-mpeg driver manager"

ATTRS{vendor}=="0x14f1"

ATTRS{device}=="0x8802"

ATTRS{subsystem_vendor}=="0x7063"

ATTRS{subsystem_device}=="0x5500"

Let’s start testing the card(s):

- Install the utilities:

sudo apt-get install dvb-apps

- You first need to scan the channels using this command (replace the X after the -a option with the adapter device number):

sudo scan -a X /usr/share/dvb/atsc/us-Cable-Standard-center-frequencies-QAM256 > ~/dvb_channels.conf

This takes a while, and it’s normal to get many “tuning failed” messages.

- I have the problem described on this wiki page. I’m not sure why it is (I imagine it has something to do with my cable provider), but my channel numbers are in brackets, and they repeat. So I need to run the script from the wiki page:

perl -pe 's/^.{6}/++$i/e;' ~/dvb_channels.conf > ~/dvb_channels_fixed.conf

- If you look in the channels file you will see a number of lines like this:

1:189000000:QAM_256:0:441:10

with the first number being the channel number. In my case, these channel numbers are arbitrary since I ran the Perl script. And I don’t know enough to figure out how to tell which line corresponds to which channel number on my TV.

- Now you can start streaming the video from the card:

sudo mplayer /dev/dvb/adapterX/dvr0

- And then you can tune the card while you watch it. In fact, you probably won’t get any video until you tune to a valid channel. I use the azap command (there are other commands you can use depending on the digital tuner type you have and what broadcast standard is used). This tunes in channel 1 from the channels config file (run this in a separate SSH session):

sudo azap -c ~/dvb_channels_fixed.conf -a X -r 1

The tuner will continue running, showing signal quality information. Ctrl-C out when finished.

- Many of the channels listed in the conf file did not work for me, so I went through the channels starting at channel 1 and moving up to find a working channel. Note that at one point, I received a message “ERROR: error while parsing modulation (syntax error)” – so I had to remove the line for that channel (I couldn’t tune to any channels after that one until I removed the line). Once I found a channel, I saved the line in its own conf file for future use. It’s a cumbersome way to do things, but I figured once I found a channel, I wouldn’t have to do it again.

- Once you find a good channel, you can capture it to a file:

sudo mplayer /dev/dvb/adapterX/dvr0 -dumpstream -dumpfile mydvbfile.ts

Again you can tune using a separate session. Ctrl-C when you are finished recording. And then you can transfer the file to your local PC, and watch it there (you will be able to hear sound).
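The trial-and-error channel search described above could be partly scripted. This is a rough sketch, not a tested procedure: the helper names are made up, and it assumes azap prints FE_HAS_LOCK in its status lines once the tuner locks onto a channel. It only defines functions; try_channel needs real hardware (and probably sudo) to run.

```shell
#!/bin/sh
# channel_line CONF N: print the line for channel N from a channels file
# whose lines look like 1:189000000:QAM_256:0:441:10
channel_line() {
    awk -F: -v n="$2" '$1 == n { print; exit }' "$1"
}

# freq_mhz: read one channel line on stdin and print its frequency in MHz
freq_mhz() {
    awk -F: '{ printf "%.1f\n", $2 / 1000000 }'
}

# has_lock: succeed if the azap output on stdin reports a signal lock
# (assumes the FE_HAS_LOCK marker appears in the status lines)
has_lock() {
    grep -q 'FE_HAS_LOCK'
}

# try_channel CONF ADAPTER N: tune for up to 5 seconds; if the tuner locks,
# save that channel's line in its own conf file for future use.
try_channel() {
    if timeout 5 azap -c "$1" -a "$2" -r "$3" 2>&1 | has_lock; then
        channel_line "$1" "$3" > "$HOME/dvb_channel_$3.conf"
        echo "channel $3: lock"
    else
        echo "channel $3: no lock"
    fi
}
```

For example, try_channel ~/dvb_channels_fixed.conf X 1 would test channel 1 on adapter X. A lock only means the tuner found a signal, so it is still worth watching the channel in MPlayer before trusting it.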

Analog Hardware Encoder Card

The Hauppauge card I have has a built-in MPEG-2 encoder. This MPEG stream, which contains the audio as well as the video, can be accessed via a /dev/video device. The card has multiple video devices, which represent the different physical inputs on the back of the card. Let’s test the card(s):

- Install the utilities:

sudo apt-get install ivtv-utils

- Start playing the video stream in a window (X is the device number):

sudo mplayer /dev/videoX

- And then you can tune the card while you watch it (keep in mind that the channel can take a while to change in your window, since there is a long lag). This will change to channel 10, assuming a US cable frequency table. Run this command in a separate SSH session:

sudo ivtv-tune --device=/dev/videoX --freqtable=us-cable --channel=10

- You can now capture to a file:

sudo mplayer /dev/videoX -dumpstream -dumpfile ~/myanalogvideo.ts

Again you can tune using a separate session. Ctrl-C when you are finished recording. And then you can transfer the file to your local PC, and watch it there (you will be able to hear sound).

Analog Framebuffer Card

My pcHDTV card provides a video stream in unencoded form. You have three devices: one for video (/dev/videoX), one for the vertical blanking interval (/dev/vbiX), and one for audio. The audio device used to be /dev/dspX, but that device no longer exists now that Ubuntu has stopped supporting the OSS sound drivers. Now you need to get the sound via the ALSA framework. You need to look at the cards registered with ALSA and figure out which one is yours (again, you will need to know what audio chipset your card is using):

cat /proc/asound/cards

I know my pcHDTV card when I see this line:

1 [CX8801 ]: CX88x - Conexant CX8801

Conexant CX8801 at 0xee000000
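If you want to look up the card number in a script rather than by eye, something like the following could work. It is a sketch that assumes the card line format shown above (index, then the ID in brackets); the function name is made up.

```shell
#!/bin/sh
# alsa_card_number ID [FILE]: print the index of the ALSA card whose
# bracketed ID matches, reading /proc/asound/cards by default.
# Card lines look like: " 1 [CX8801         ]: CX88x - Conexant CX8801"
alsa_card_number() {
    awk -v id="$1" '
        $1 ~ /^[0-9]+$/ && $0 ~ ("\\[" id " *\\]") { print $1; exit }
    ' "${2:-/proc/asound/cards}"
}
```

On my system, alsa_card_number CX8801 would print 1. The same number goes into the ALSA:hw:X,0 audio device setting inside MythTV.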

Let’s test the card(s):

- Install the required utilities:

sudo apt-get install ivtv-utils mencoder

- Start playing the video stream in a window (X is the device number):

sudo mplayer tv:// -tv driver=v4l2:device=/dev/videoX

This is just showing the video stream, which does not include any sound, even if you could hear sound over your SSH session.

- Tune the card while you watch it. This will change to channel 10, assuming a US cable frequency table. Run this command in a separate SSH session:

sudo ivtv-tune --device=/dev/videoX --freqtable=us-cable --channel=10

- Let’s record the video and audio. This is more complicated than for a hardware encoder card, since you need to encode the video and audio yourself. In the adevice part, substitute Y with the card number you got from /proc/asound/cards earlier (in my case, this is 1 based on the output I show above):

sudo mencoder tv:// -tv driver=v4l2:device=/dev/videoX:alsa=1:adevice=hw.Y,0 -oac pcm -ovc lavc -lavcopts vcodec=mpeg4:vpass=1 -o ~/test.avi

Again you can tune using a separate session.

- You can monitor the CPU usage of mencoder. Encoding is a CPU-intensive job, and this could be a concern with an older PC. I use this command to show the CPU usage of the most CPU-intensive processes, of which mencoder is the most intensive:

top

- Ctrl-C when you are finished recording. And then you can transfer the file to your local PC, and watch it there (you will be able to hear sound).

I don’t test the VBI device. As far as I can tell, this is useful because it contains extra data, such as closed captioning.

udev Rules

It is tedious to use device numbers to identify your devices. And there’s also the possibility that the numbers could change. You can use a udev rule file to set up sensibly named symlinks to your device files. For example, instead of using /dev/dvb/adapter1 to get at my pcHDTV card, I create a symlink called /dev/dvb/adapter_pchdtv. Basically, to make a rule, you need to get information from the udevadm command I gave earlier – you need to find information that uniquely identifies a particular card. Then you need to write the rules. Explaining how to write rules is way beyond the scope of this writeup. But there are many guides, including this one.

Here’s how to create the rule file:

sudo $EDITOR /etc/udev/rules.d/10-mythtv.rules

- Here is a sample of what I put in my rule file:

# /etc/udev/rules.d/10-mythtv.rules

# *** PCHDTV Digital ***

SUBSYSTEM=="dvb", DRIVERS=="cx88-mpeg driver manager", ATTRS{subsystem_vendor}=="0x7063", ATTRS{subsystem_device}=="0x5500", PROGRAM="/bin/sh -c 'K=%k; K=$${K#dvb}; printf dvb/adapter_pchdtv/%%s $${K#*.}'", SYMLINK+="%c"

# *** PCHDTV Analog ***

#Note the brackets in ATTR{name} could not be matched because these

#signify a character range in the match string. Had to use * instead.

#Could not figure out how to escape the brackets.

SUBSYSTEM=="video4linux", ATTR{name}=="cx88* video (pcHDTV HD5500 HD*", DRIVERS=="cx8800", ATTRS{subsystem_vendor}=="0x7063", ATTRS{subsystem_device}=="0x5500", SYMLINK+="video_pchdtv"

SUBSYSTEM=="video4linux", ATTR{name}=="cx88* vbi (pcHDTV HD5500 HDTV*", DRIVERS=="cx8800", ATTRS{subsystem_vendor}=="0x7063", ATTRS{subsystem_device}=="0x5500", SYMLINK+="vbi_pchdtv"

# Note that you cannot match attributes from more than one parent at a time

# This line is no longer necessary, since we no longer have a dsp device.

#KERNEL=="dsp*", SUBSYSTEM=="sound", ATTRS{id}=="CX8801", SYMLINK+="dsp_pchdtv"

# *** Hauppauge Digital ***

SUBSYSTEM=="dvb", DRIVERS=="cx18", ATTRS{subsystem_vendor}=="0x0070", ATTRS{subsystem_device}=="0x7404", PROGRAM="/bin/sh -c 'K=%k; K=$${K#dvb}; printf dvb/adapter_hvr1600/%%s $${K#*.}'", SYMLINK+="%c"

# *** Hauppauge Analog ***

SUBSYSTEM=="video4linux", ATTR{name}=="cx18-0 encoder MPEG*", DRIVERS=="cx18", ATTRS{subsystem_vendor}=="0x0070", ATTRS{subsystem_device}=="0x7404", SYMLINK+="video_hvr1600"

- Once you save the file, you can do a test. You need to find your device(s) under the /sys/class folder structure, which is a virtual filesystem where you can see raw device/driver information. Then run the following commands against those files. I give two examples, one for my analog card and one for my digital card:

sudo udevadm test /class/video4linux/video0

sudo udevadm test /class/dvb/dvb0.dvr0

The command should print out a bunch of information. Then when you look in your /dev folder you will see the new symlinks to your devices, as specified by the rules. And you can test out the new symlinks using MPlayer. If there’s a problem, you’ll have to continue to tweak your udev file. Once you have it working, I suggest you reboot and then recheck to make sure all the symlinks show up.
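The PROGRAM part of the DVB rules is the least obvious piece, so here is a sketch of what it does, written as a plain shell function (inside a udev rule, $$ stands for a literal $ and %% for a literal %). The second function is one possible way to recheck the symlinks after a reboot. Both function names are made up.

```shell
#!/bin/sh
# dvb_link_name PREFIX KERNEL_NAME: repeat what the PROGRAM part of the DVB
# rules does. %k is the kernel name of the device, e.g. dvb0.dvr0.
dvb_link_name() {
    k=$2
    k=${k#dvb}                        # drop the leading "dvb"   -> 0.dvr0
    printf '%s/%s\n' "$1" "${k#*.}"   # drop up to the first dot -> dvr0
}

# check_links PATH...: report each path that is not a symlink pointing at
# an existing target; return non-zero if any of them is missing.
check_links() {
    rc=0
    for link in "$@"; do
        if [ -L "$link" ] && [ -e "$link" ]; then
            echo "ok      $link -> $(readlink "$link")"
        else
            echo "MISSING $link"
            rc=1
        fi
    done
    return $rc
}
```

With the rules above, dvb_link_name dvb/adapter_pchdtv dvb0.dvr0 prints dvb/adapter_pchdtv/dvr0, which is the symlink name udev creates under /dev. After a reboot, a check could look like: check_links /dev/video_pchdtv /dev/vbi_pchdtv /dev/video_hvr1600 /dev/dvb/adapter_pchdtv/dvr0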

Installing MythTV

Finally, we can start installing MythTV. The MythTV wiki’s backend configuration guide came in useful for this part.

I like to install some of the more universally used packages separately.

- The first is the MySQL database:

sudo apt-get install mysql-server mysql-client

You will need to select a root password during the install.

- The second package is the Apache web server:

sudo apt-get install apache2

Now install MythTV:

-

sudo apt-get install mythtv-backend-master

- When asked “Will other computers run MythTV?”, I answer Yes. I don’t normally use a frontend, but would like to be prepped for one, and I also use the frontend initially for testing. It appears the way a frontend connects is to first log in to the MySQL database running on the backend, from which it gets the connect info for the MythTV daemon itself. Then, it logs into the MythTV daemon. Trying to open up the access later is a big hassle which requires granting more permissions in the database. On the other hand, this is a security risk. There is a way to tighten the MySQL database down so that only certain PCs can get into it, as outlined here. You can also do some security tightening using a firewall.

- I answer No when asked “Would you like to password-protect MythWeb?”. It would be more secure to have a password, but the last time I tried to do this, I found that video streaming clients do not handle passwords well. Ubuntu’s MythWeb guide has more information about setting up and protecting MythWeb.

- I answer No when asked “Will you be using this webserver exclusively with mythweb?”

I like to set up a separate partition for MythTV, although I still mount it in MythTV’s default folder: /var/lib/mythtv. I use the XFS filesystem as suggested here.

-

sudo apt-get install xfsprogs xfsdump

- Create the LVM partition as described in Part 1. I name the partition mythtv. But instead of using mkfs.ext4, I use mkfs.xfs:

sudo mkfs.xfs /dev/mainvg/mythtv

- We need to mount the partition in a temporary area (we need to copy the MythTV directory structure into the new partition).

sudo mkdir /mnt/mythtvtmp

sudo mount -t xfs /dev/mapper/mainvg-mythtv /mnt/mythtvtmp

- Make sure the /mnt/mythtvtmp folder has the permissions to match /var/lib/mythtv:

ls -ld /var/lib/mythtv

ls -ld /mnt/mythtvtmp

- Copy the directory structure from MythTV’s default folder. I set the rsync options to copy pretty much everything.

sudo rsync -avHAXS /var/lib/mythtv/ /mnt/mythtvtmp

Then verify the folders are in their new location.

ls /mnt/mythtvtmp

- Now remove the existing folder structure:

sudo rm -r /var/lib/mythtv/*

- Unmount the partition from its temporary location:

sudo umount /mnt/mythtvtmp

sudo rmdir /mnt/mythtvtmp

-

sudo $EDITOR /etc/fstab

Add this line (replace the <tab>’s with actual tabs):

/dev/mapper/mainvg-mythtv<tab>/var/lib/mythtv<tab>xfs<tab>defaults<tab>0<tab>0

And mount it:

sudo mount /var/lib/mythtv
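Two of the steps above are easy to get wrong by eye: comparing the folder permissions, and typing real tabs into /etc/fstab. The following sketch shows one way to script both. The function names are made up, and stat -c assumes GNU coreutils, which is what Ubuntu ships.

```shell
#!/bin/sh
# same_perms A B: succeed if both paths have the same mode, owner and group.
same_perms() {
    [ "$(stat -c '%a %U:%G' "$1")" = "$(stat -c '%a %U:%G' "$2")" ]
}

# fstab_line DEVICE MOUNTPOINT FSTYPE: print a tab-separated fstab entry
# using the same "defaults 0 0" fields as the line shown above.
fstab_line() {
    printf '%s\t%s\t%s\tdefaults\t0\t0\n' "$1" "$2" "$3"
}
```

While the partition is still mounted in its temporary location, same_perms /var/lib/mythtv /mnt/mythtvtmp || echo "permissions differ" does the comparison. For the fstab entry, fstab_line /dev/mapper/mainvg-mythtv /var/lib/mythtv xfs prints the line, which you can paste into the editor or pipe through sudo tee -a /etc/fstab.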

Configuring MythTV

I am assuming you have an account with Schedules Direct, from which MythTV can get its TV schedule. You have to pay a subscription fee. I’m not sure if there are any other legitimate sites, but I find Schedules Direct well worth the cost.

Start the setup program:

-

sudo mythtv-setup

- Answer Yes when asked “Would you like to automatically be added to the group?”

- Answer Yes at the “Save all work and then press OK to restart your session” prompt.

- Answer OK at the “Please manually log out of your session for the changes to take effect” prompt.

- Log out and back in again.

-

sudo mythtv-setup

- Answer Yes when asked “Is it OK to close any currently running mythbackend processes?”

- You should get a graphical set up window.

General Setup

- Select “1. General”.

- I leave the IP address as the address of the server. If no other PCs will run a frontend, then you can make it 127.0.0.1.

- Select a Security PIN.

- Next.

- Next.

- Uncheck “Delete files slowly”. You shouldn’t need this for an XFS filesystem.

- Hit Next until you are on the last screen. Check the “Automatically run mythfilldatabase” checkbox. mythfilldatabase is what downloads the listings from Schedules Direct.

- Finish.

Capture Card Setup

- Select “2. Capture Card”.

- Create a “New capture card” for each card you have. Remember to use the symlink devices that you created earlier.

For a DVB card use the following settings:

- Card type: DVB DTV capture card

- DVB device number: /dev/dvb/adapterXXX/frontend0

For an analog hardware encoder card use these settings:

- Card type: IVTV MPEG-2 encoder card

- Video device: /dev/videoXXX

- Default input: Select an input – I use Tuner 1

For an analog framebuffer card, use these settings:

- Video device: /dev/videoXXX

- VBI device: /dev/vbiYYY

- Audio device (X is the ALSA device number): ALSA:hw:X,0

- Force audio sampling rate: I set this to 48000 (this is recommended for the pcHDTV analog card). Otherwise you can leave it set to None.

- Default input: Select your input – I use Television

For my pcHDTV card, I cannot record from the digital and the analog tuners at the same time. When setting up the pcHDTV digital tuner, I need to go into “Recording Options”, and make sure the following are set properly:

- “Open DVB card on demand” should be checked.

- “Use DVB card for active EIT scan” should be unchecked.

Otherwise, when I am using the analog tuner, I start getting static.

If you are running in a VM:

- Put video files somewhere on your server (I put them in my home folder). I used the .ts files I captured on real hardware during testing.

- When adding the capture card, select a card type of Import test recorder.

- Enter the file’s path.

Video Source Setup

Before you do this, you will need to have your lineups set up inside Schedules Direct. I have one lineup for the digital channels, and one for the analog channels. Make sure to edit the lineups to remove any bogus channels that you don’t actually have.

- Select “3. Video sources”.

- Create a “New video source” for each lineup.

- I name my video sources “Analog” and “Digital” based on the lineup types.

- I keep the Listings grabber set to North America (SchedulesDirect.org).

- Enter your Schedules Direct login information.

- “Retrieve Lineups”

- In Data Direct Lineup, select the right lineup.

- Set the “Channel frequency table”. You don’t need to if it is the same as the one in General settings, but I always set it anyway. For the Digital video source, I set it to us-bcast, and for my Analog video source, I set it to us-cable.

Input Connections

The first time you set up MythTV on your server, it is probably best to set up one input connection at a time. Give one connection a try, then remove that connection, and add the next one. Fortunately, MythTV will remember the fetched/scanned channels when you re-create a connection that you previously removed.

- Select “4. Input connections”.

- Map each card/input to a video source. Note that analog cards will have multiple inputs to select. If you don’t use a particular input, leave it mapped to None.

To map a card/input, first select it, and then select a video source.

For an analog card/input, I don’t need to scan channels. I just use the channels from the lineup by selecting “Fetch channels from listing source”.

For a digital card:

- Select “Scan for channels” (it appears the scan is mandatory).

- In my case, I select a frequency table of “Cable” and leave the defaults for the rest.

- Select Next.

- Now wait while the channels are scanned. This takes a while. It should filter out any channels that are scrambled.

- I get multiple prompts regarding non-conflicting channels. I choose to “Insert all” non-conflicting channels.

- When I get conflicting channels, I update them (I pick an arbitrary high channel number). I assume these channels will not work and I’ll probably end up deleting these later, but I am more comfortable keeping them for now.

- Finish.

- I do not select to “Fetch channels from listing source”.

- Select a starting channel.

- Next.

- Finish.

Again, for the pcHDTV card, I need to prevent it from recording from the analog and digital sources at the same time. I need to do the following:

- When inside the Input Connections screen, select “Create a New Input Group”.

- For both the digital and analog tuners, specify this group in the “Input group 1” field. With both cards in the same group, MythTV does not try to use them at the same time.

I have two analog tuners, but I always want my Hauppauge hardware encoder tuner to take precedence – I only want to use the pcHDTV framebuffer tuner if the Hauppauge tuner is busy. This is because the pcHDTV tuner requires more CPU resources, and also because the video that MythTV encodes from the pcHDTV card is less flexible than the MPEG stream from the Hauppauge card (see below for more details). To do this, I add the Hauppauge card first when I am setting up the Input Connections. This gives it a lower ID in the database, which means MythTV uses it first. Crude, but it seems to work.

Channel Setup

- Select “5. Channel Editor”.

- I get a bunch of channels without names when I do a digital scan, so I clean them up. Cleaning these can be tedious. You can wait until you get the frontend set up and then flip through in Live TV mode and see which ones work. But I’ve found that tuning to a bad channel can cause the frontend to crash or hang. I get some good channels that don’t show up in the Schedules Direct listings, so the schedule isn’t a reliable guide. And the channels that the scan finds don’t exactly match the ones my TV tunes to. I ended up just deleting any digital channels that don’t have a name. I figure if they don’t come up with a name, they are not of interest, and are more than likely “bad” channels.

- As far as the analog channels go, I can add any analog channels that are missing from the schedule (since we didn’t do a scan, we will only have channels in the listing).

I had one odd situation: Schedules Direct shows a channel in my analog lineup, but it doesn’t show the channel in my digital lineup. In reality, it is the opposite – I have the digital channel, but not the analog version. To fix this, I manually added the digital channel, and then I set its XMLTV ID to the same value as the analog channel (I got the ID from Schedules Direct, and it also shows up in MythTV’s channel setup). This associates the schedule from the analog channel with the digital channel. I would like to remove the analog channel, but if I do that it will not download the schedule, which is needed for the digital channel. I need to look into it some more, but for now I just leave the analog channel and remember that I can’t use it…

Inside the Channel Editor, I also download icons for the channels:

- Select “Icon Download”

- Select “Download all icons…”

- The icons will be downloaded. For some channels, you will need to select which icon is the correct one, or you can skip if none are correct (or none are shown). This takes a while.

Finishing the Setup

Now you can exit the setup program by hitting the Escape key. Answer Yes when asked “Would you like to run mythfilldatabase?” This will load the schedules to your PC.

Managing MythTV

Later on, if you need to stop or start the MythTV backend, use these commands:

sudo stop mythtv-backend

sudo start mythtv-backend

If you want to manually do a schedule update:

sudo mythfilldatabase

Using MythTV

Like setting up the backend, setting up a frontend to watch the video can be difficult. You have several different options, which I explain here.

MythTV Frontend

This is MythTV’s official frontend. Even though I don’t plan on using it over the long term, I still find it useful to test and troubleshoot my backend setup. I install the frontend on another Ubuntu PC (the package is mythtv-frontend). At some point, I may look into setting it up on a Windows PC, which is possible, but appears to be a more difficult process.

Before trying MythTV, I try connecting with MySQL, since this is the first thing that MythTV does.

- Get the database login info. On the server, look at the MySQL config file:

sudo cat /etc/mythtv/mysql.txt

- Then run this command on a client machine (I assume you are on an Ubuntu machine and have the mysql-client package installed):

mysql -u mythtv -h serveripaddress -p mythconverg

You will need to enter the password, at which time you should be logged in.
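If you would rather not copy the values by hand, the settings file can be parsed with a couple of small helpers. This is only a sketch: it assumes mysql.txt uses KEY=value lines with keys named DBUserName and DBName (check your own file), and the function names are made up. The second function only prints the command, it does not run it.

```shell
#!/bin/sh
# mysql_setting FILE KEY: print the value of KEY from a KEY=value file.
# Commented-out lines (starting with #) do not match and are skipped.
mysql_setting() {
    sed -n "s/^$2=//p" "$1" | head -n 1
}

# mysql_test_command FILE SERVER: print the client command for testing the
# connection. The -p option makes mysql prompt for the password.
# DBUserName and DBName are assumed key names.
mysql_test_command() {
    printf 'mysql -u %s -h %s -p %s\n' \
        "$(mysql_setting "$1" DBUserName)" "$2" "$(mysql_setting "$1" DBName)"
}
```

The file is only readable by root on my server, so I would run this there with sudo (or on a copy of the file), then paste the printed command on the client.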

Now, try running the frontend. Inside Watch TV, use these keyboard commands. You can switch cards/inputs by pressing “Y” and “C”. But if you have multiple cards of the same type (e.g. if you have two digital input cards), it doesn’t seem to allow you to switch between the two of them so that you can test them both. A trick I’ve used to switch to a different card is to press “R” so that it will start recording, and then change to a different channel, and it will start using your other card. I’ve found the frontend flaky, though, and sometimes I can’t manually stop the recording… I have to cancel it in the recording area, but then sometimes I have to restart MythTV backend to really make it stop recording.

MythWeb

To access the MythWeb page, go to http://ipaddress/mythweb. I find the web page to be more reliable than the frontend. And you don’t need to install anything on your local PC, as long as it has a media program that can stream video. But the page is only useful for managing and watching recordings – you can’t watch live TV with it.

Make sure to check out the Backend Status tab, which can come in handy during troubleshooting.

I have an issue when I select a program to record – it shows an error and takes a long time for the details to come up. But after my first recording, the issue seems to go away. The problem is described in this thread. I haven’t had a chance to look into it yet…

I have trouble playing the streaming video with Windows Media Player. VLC media player seems to be the most popular choice, although I had problems playing the .nuv formatted video that MythTV encodes from the pcHDTV “analog framebuffer” tuner (the digital tuner and the analog hardware encoder card both create MPEG videos, which seem to be better supported). MPlayer (I used the SMPlayer frontend) works well with the .nuv videos, but it doesn’t appear to support the streaming video.

MythTV Player

I recently discovered the MythTV Player, which is a lightweight alternative to the MythTV frontend. And it works well in Windows (MythTV frontend is supported on Windows, but the compile and setup process seemed intimidating). I have used version 0.7.0 of MythTV Player to play recorded videos, and so far it is working well (the user interface is a little glitchy, though). And it plays all of my recordings, including the .nuv videos that VLC had trouble with. But it appears to be only for watching video – it doesn’t look like you can use it to set up recordings, etc. So, I still need to use MythWeb or the MythTV frontend for that part.

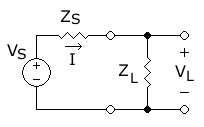

is the voltage source, which we assume to be a steady-state sinusoidal source.

is the source or internal impedance (with resistance

and reactance

).

is the load impedance (with resistance

and reactance

).

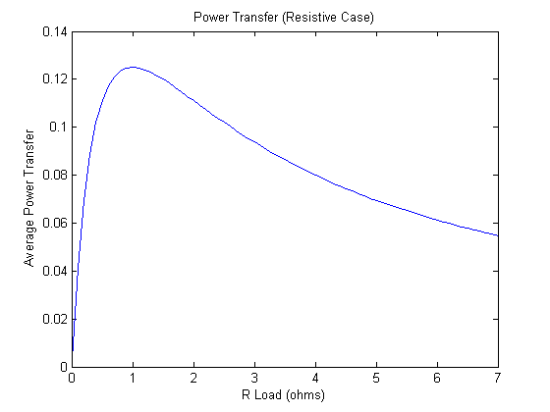

), the average load power as a function of load resistance (we assume the source voltage and load resistance are constants) is:

where

we can find the

that gives the maximum power by solving the equation

to get:

. We can plot a line to follow this maximum power:

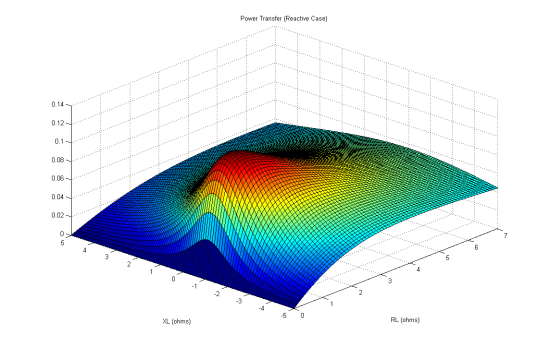

we can find the

that gives the maximum power by solving the equation

to get:

.